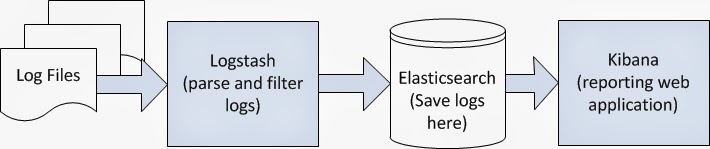

If you want to monitor logs from multiple sources (for example Tomcat, IIS and so on) from a single location, Logstash is your friend. Logstash takes log data from multiple sources, converts all of it into a common format, and pushes that data into Elasticsearch for storage. You then use Kibana to monitor that data.

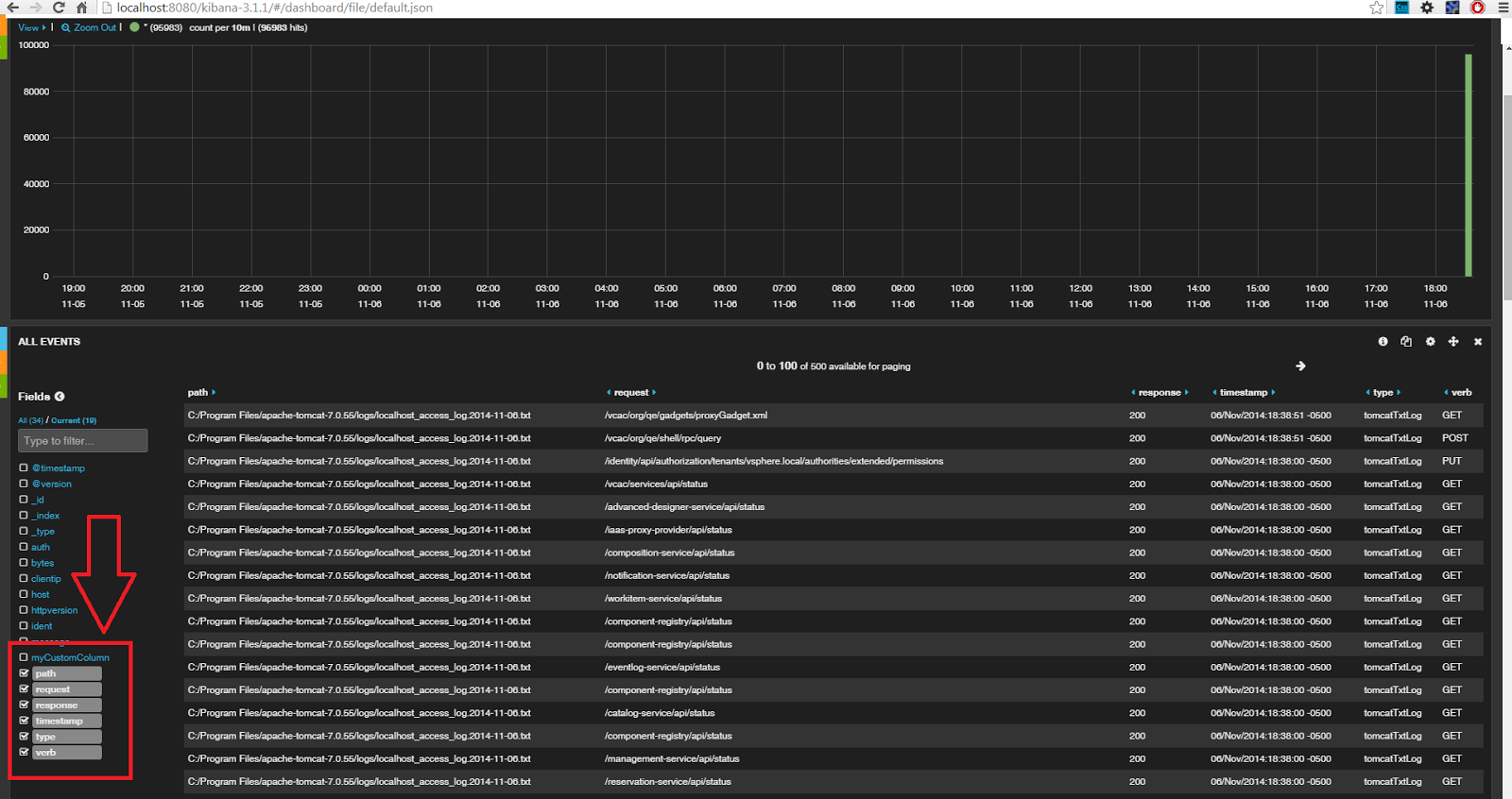

In this article we will just look at a very basic example to help you get started. Follow these steps to work along.

Prerequisites

- You need the JDK (I have jdk1.7.0_67)

- You need to have Apache Tomcat installed

Steps

Install Logstash

Unzip the downloaded file and copy it into C:\Program Files

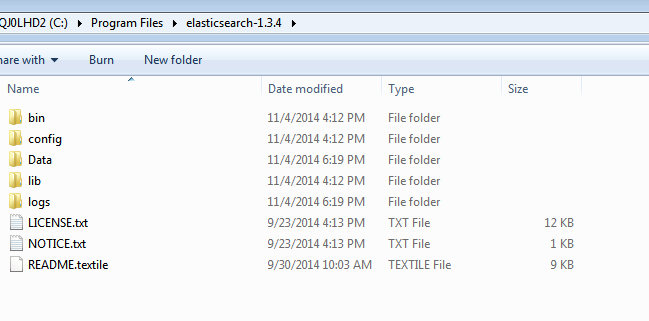

Install and start up Elasticsearch

Unzip it and copy it into C:\Program Files\elasticsearch-1.3.4

Open C:\Program Files\elasticsearch-1.3.4\config\elasticsearch.yml in Notepad.

Change cluster.name to whatever you like. I set mine to VivekLocalMachine

    ################################### Cluster ###################################
    # Cluster name identifies your cluster for auto-discovery. If you're running
    # multiple clusters on the same network, make sure you're using unique names.
    #
    cluster.name: VivekLocalMachine

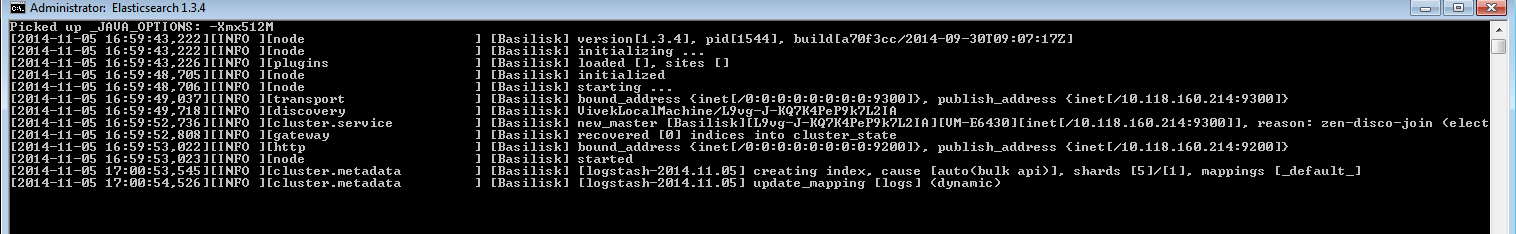

Double-click C:\Program Files\elasticsearch-1.3.4\bin\elasticsearch.bat to start the Elasticsearch service.
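If you want to confirm that the new cluster name was picked up, one option is to ask Elasticsearch for its cluster health and read the name back. The Python snippet below is only a minimal sketch of that idea. It assumes Elasticsearch is listening on localhost on the default HTTP port 9200, and the function names are made up for this example.

```python
import json
from urllib.request import urlopen

# Assumption: Elasticsearch is running locally on its default HTTP port.
HEALTH_URL = "http://localhost:9200/_cluster/health"


def cluster_name_from_health(body):
    """Pull the cluster name out of a _cluster/health JSON response body."""
    return json.loads(body)["cluster_name"]


def fetch_cluster_name(url=HEALTH_URL):
    """Ask the running node for its cluster name (Elasticsearch must be up)."""
    with urlopen(url, timeout=5) as response:
        return cluster_name_from_health(response.read().decode("utf-8"))


# Trimmed-down, hand-written response body so the example runs without a server.
sample = '{"cluster_name": "VivekLocalMachine", "status": "yellow"}'
print(cluster_name_from_health(sample))
```

With Elasticsearch running, calling fetch_cluster_name() should return the same value you put into elasticsearch.yml.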

Install Kibana and point it to the Elasticsearch service

Copy Kibana to your Tomcat webapps folder. For me that was C:\Program Files\apache-tomcat-7.0.55\webapps

Overwrite

C:\Program Files\apache-tomcat-7.0.55\webapps\kibana-3.1.1\app\dashboards\default.json

with the contents of

C:\Program Files\apache-tomcat-7.0.55\webapps\kibana-3.1.1\app\dashboards\logstash.json

Open C:\Program Files\apache-tomcat-7.0.55\webapps\kibana-3.1.1\config.js in Notepad.

Check the value of the elasticsearch setting. That is where Kibana will look for the Elasticsearch server.

Open the Kibana web UI in a browser. You can open it directly from the Tomcat manager. For me that URL was

http://localhost:8080/kibana-3.1.1/#/dashboard/file/default.json

Configure Logstash to read the Tomcat logs and pump that data into Elasticsearch

First we need to create a *.conf file (you can give it any name). This file tells Logstash where to read the data from and where to send it. I am going to create a file called logstash.conf in C:\Program Files\logstash-1.4.2\bin.

A Logstash conf file consists of the three parts shown below

    input {
    }

    filter {
    }

    output {
    }

Input: This tells Logstash where the data is coming from, for example file, eventlog, twitter, tcp and so on. All the supported inputs can be found here.

Filter: This tells Logstash what you want to do to the data before you output it into your log store (in our case Elasticsearch). Filters can use regular expressions. Most people use grok, which comes with predefined, named regular expressions, instead of writing their own regular expressions to parse log data. The complete list of filters you can use can be seen here.

Output: This tells Logstash where to output the filtered data. We are going to output it into Elasticsearch. You can have multiple outputs if you want.
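As a mental model, the three parts behave like a small pipeline: every line read by an input becomes an event, each filter gets a chance to change that event, and every output receives the result. The Python sketch below only illustrates that flow. It is not how Logstash is implemented, and all function and variable names in it are invented for the example.

```python
def run_pipeline(lines, filters, outputs):
    """Push each input line through every filter, then hand it to every output."""
    for line in lines:
        event = {"message": line}  # the raw log line lives in the "message" field
        for apply_filter in filters:
            event = apply_filter(event)
        for send in outputs:
            send(event)


def add_type(event):
    """Stand-in filter: label the event so it can be told apart later."""
    event["type"] = "tomcatTxtLog"
    return event


stored = []   # stands in for the elasticsearch output
printed = []  # stands in for a second output, since multiple outputs are allowed

run_pipeline(
    ["first log line", "second log line"],
    filters=[add_type],
    outputs=[stored.append, printed.append],
)
print(stored[0])
```

Both outputs receive every event, which is the point of allowing more than one output block.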

This is what my logstash.conf looks like

    input {
      file {
        path => ["C:/Program Files/apache-tomcat-7.0.55/logs/*.log"]
      }
    }

    filter {
    }

    output {
      elasticsearch {
        cluster => "VivekLocalMachine"
        port => "9200"
        protocol => "http"
      }
    }

The cluster name should match what you set in C:\Program Files\elasticsearch-1.3.4\config\elasticsearch.yml

Many times, after making changes to my logstash.conf, I had to restart the machine that hosted Logstash for the changes to take effect. Restarting Logstash alone didn't always help.

Start up Logstash

In the command prompt, cd into

C:\Program Files\logstash-1.4.2\bin

Then run this

logstash.bat agent -f logstash.conf

Now you should start seeing data in real time in your Kibana website. For me that URL is

http://localhost:8080/kibana-3.1.1/index.html

Adding a filter in the Logstash conf file

Let's take this example one step further by adding a filter in the C:\Program Files\logstash-1.4.2\bin\logst

We will be adding a "grok" filter. The purpose of this filter is to parse the log text into a format that is easier to read. Logstash comes with many predefined regular expressions in

C:\Program Files\logstash-1.4.2\patterns\

All these regular expressions are given names in the pattern files. We will be using those names in our grok filter.

Given below is the logstash.conf file with a filter applied.

    input {
      file {
        path => ["C:/Program Files/apache-tomcat-7.0.55/logs/*.txt"]
        type => "tomcatTxtLog"
      }
    }

    filter {
      grok {
        match => ["message", "%{COMMONAPACHELOG:myCustomColumn}"]
      }
    }

    output {
      elasticsearch {
        cluster => "VivekLocalMachine"
        port => "9200"
        protocol => "http"
      }
    }

This is what the Kibana output looks like with the filter applied.

Use this tool to play around with grok filters.

To test the example above, put these values into the Grok Debugger

0:0:0:0:0:0:0:1 - - [30/Oct/2014:17:08:41 -0400] "GET / HTTP/1.1" 200 11418

%{COMMONAPACHELOG}
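To get a feel for what a grok pattern does with that sample line, here is a rough Python sketch that splits it into named fields with an ordinary regular expression. It is a simplified approximation written for this example, not the actual COMMONAPACHELOG definition from the patterns folder, and the field names are my assumption about what that pattern produces.

```python
import re

# Simplified stand-in for COMMONAPACHELOG; the real grok pattern is stricter.
COMMON_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-)'
)

line = '0:0:0:0:0:0:0:1 - - [30/Oct/2014:17:08:41 -0400] "GET / HTTP/1.1" 200 11418'

match = COMMON_LOG.match(line)
fields = match.groupdict() if match else {}

# Each named group becomes its own field, which is what makes the
# parsed events easier to search and chart than the raw message.
for name, value in fields.items():
    print(name, "=", value)
```

Grok works the same way in spirit: named pieces of the pattern turn into separate fields on the event.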

To check the indices created by Logstash in Elasticsearch, go to this URL

http://localhost:9200/_cat/indices?v
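That URL returns a plain text table, one row per index. By default Logstash writes to one index per day, named like logstash-YYYY.MM.dd. If you would rather check for those from a script, a sketch along these lines could work. The sample text is hand-written for illustration (it is not output from my machine), the helper names are hypothetical, and the parser reads the header row instead of hard-coding columns because the column set can differ between versions.

```python
def parse_cat_indices(text):
    """Turn the whitespace-separated _cat/indices?v table into a list of dicts."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in data]


def logstash_indices(text):
    """Return only the index names that follow the logstash-* naming scheme."""
    return [
        row["index"]
        for row in parse_cat_indices(text)
        if row.get("index", "").startswith("logstash-")
    ]


# Hand-written sample in the general shape of the ?v output (illustrative only).
sample = """\
health index               pri rep docs.count docs.deleted store.size pri.store.size
yellow logstash-2014.10.30   5   1        120            0     95.1kb         95.1kb
yellow kibana-int            5   1          2            0     12.3kb         12.3kb
"""

print(logstash_indices(sample))
```

To run it against a live server, fetch the text from the URL above and pass it to logstash_indices.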

Check this for a more detailed explanation on logstash filters.

Check this for more on logstash configuration files.

I store my log files with the current date prefixed to the file name, so I have hundreds of log files. Is there an expression that can be added to the file "path" to push only the files with the current date?
