The easiest way to get a return value from a thread in C# is to run the work as a `Task<TResult>`, for example with `Task.Run`, and read the task's `Result` property.

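```csharp
using System;
using System.Threading.Tasks;

internal class Program
{
    public static void Main(string[] args)
    {
        // Task.Run queues Add(1, 2) on the thread pool and returns a Task<int>
        Task<int> task = Task.Run(() => Add(1, 2));

        // Result waits for the task to finish, then returns its value
        int result = task.Result;
        Console.WriteLine(result); // 3
    }

    public static int Add(int a, int b)
    {
        return a + b;
    }
}
```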
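For comparison, here is a minimal sketch of the same computation on a plain `System.Threading.Thread`. A `Thread` has no return value of its own, so the result has to be passed back through a captured variable, and the caller must `Join` the thread before reading it. `ThreadDemo` and `AddOnThread` are hypothetical names used only for this illustration.

```csharp
using System;
using System.Threading;

public static class ThreadDemo
{
    // Hypothetical helper: computes a + b on a dedicated thread and hands the value back.
    public static int AddOnThread(int a, int b)
    {
        int result = 0; // captured by the lambda and written by the worker thread

        var worker = new Thread(() => result = a + b);
        worker.Start();

        // Wait for the worker to finish before reading the captured variable
        worker.Join();
        return result;
    }
}
```

The extra bookkeeping (shared variable plus `Join`) is exactly what `Task<int>` and its `Result` property take care of for you.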

You can also run several statements in the task by giving `Task.Run` a lambda with a block body:

```csharp
Task<int> task = Task.Run(() =>
{
    Console.WriteLine("Adding..");
    return Add(1, 2);
});
```

Note that `int result = task.Result;` blocks the calling thread (here, the main thread) until the task finishes and its result is available.
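To see the blocking for yourself, here is a minimal sketch that times how long the calling thread waits on `Result`. The `BlockingDemo` class, the `MeasureResultWaitMs` method, and the simulated delay are assumptions for illustration.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

public static class BlockingDemo
{
    // Hypothetical helper: returns how long the caller was blocked on task.Result, in milliseconds.
    public static long MeasureResultWaitMs(int workMs)
    {
        Task<int> task = Task.Run(() =>
        {
            Thread.Sleep(workMs); // simulate slow work on a thread-pool thread
            return 1 + 2;
        });

        var stopwatch = Stopwatch.StartNew();
        int result = task.Result; // the calling thread stops here until the task completes
        stopwatch.Stop();

        Console.WriteLine("Result {0} after waiting {1} ms", result, stopwatch.ElapsedMilliseconds);
        return stopwatch.ElapsedMilliseconds;
    }
}
```

Calling `BlockingDemo.MeasureResultWaitMs(500)` should report a wait of roughly half a second, during which the caller can do nothing else.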

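Catching exceptions in tasks

If the code running inside a task throws, the exception is stored in the task. Calling `Wait()` or reading `Result` rethrows it wrapped in an `AggregateException`, whose `InnerException` is the original exception.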

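```csharp
try
{
    Task<int> task = Task.Run(() => Add(1, 2));

    // Wait() (like Result) rethrows an exception from the task, wrapped in an AggregateException
    task.Wait();
}
catch (AggregateException ex)
{
    // InnerException is the exception that was thrown inside the task
    Console.WriteLine(ex.InnerException.Message);
}
```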
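`Add` never throws, so the `catch` block in the example above never runs. Here is a minimal sketch with a hypothetical `Divide` helper that does throw (integer division by zero raises `DivideByZeroException` at run time). It also contrasts `Wait()` with `GetAwaiter().GetResult()`, which rethrows the original exception without the `AggregateException` wrapper. `ExceptionDemo`, `ViaWait`, and `ViaGetResult` are illustrative names.

```csharp
using System;
using System.Threading.Tasks;

public static class ExceptionDemo
{
    // Hypothetical helper: throws DivideByZeroException when b is 0.
    public static int Divide(int a, int b) => a / b;

    // Wait() wraps the task's exception in an AggregateException.
    public static string ViaWait()
    {
        try
        {
            Task<int> task = Task.Run(() => Divide(1, 0));
            task.Wait();
            return "no exception";
        }
        catch (AggregateException ex)
        {
            return ex.InnerException.GetType().Name;
        }
    }

    // GetAwaiter().GetResult() rethrows the original exception directly.
    public static string ViaGetResult()
    {
        try
        {
            Task<int> task = Task.Run(() => Divide(1, 0));
            task.GetAwaiter().GetResult();
            return "no exception";
        }
        catch (DivideByZeroException ex)
        {
            return ex.GetType().Name;
        }
    }
}
```

Both methods return `"DivideByZeroException"`; the difference is only in which exception type the `catch` clause has to name.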

Running a task asynchronously

You don't have to block while waiting for the result. You can start the task, carry on with other work, and register a callback (a continuation) that runs once the result is available.

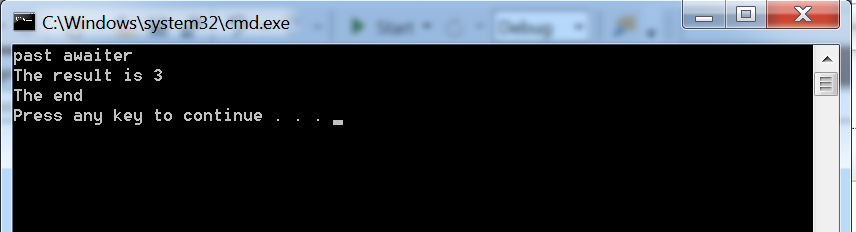

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;

internal class Test
{
    public static void Main(string[] args)
    {
        Task<int> task = Task.Run(() => Add(1, 2));

        // Register a callback that runs once the task has completed
        TaskAwaiter<int> awaiter = task.GetAwaiter();
        awaiter.OnCompleted(() =>
        {
            // The task has finished, so GetResult() returns without blocking
            Console.WriteLine("The result is {0}", awaiter.GetResult());
        });

        // Main is not blocked: this prints while Add is still running
        Console.WriteLine("past awaiter");

        // Keep the process alive long enough for the callback to run
        Thread.Sleep(5000);
        Console.WriteLine("The end");
    }

    public static int Add(int a, int b)
    {
        // Simulate a slow computation
        Thread.Sleep(2000);
        return a + b;
    }
}
```
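In everyday code you would usually write `await` instead of calling `GetAwaiter()` and `OnCompleted()` yourself. The `await` keyword is built on the same awaiter pattern: the compiler calls `GetAwaiter()`, checks `IsCompleted`, registers the rest of the method as a continuation, and later calls `GetResult()`. A minimal sketch of the example above in that style; `AwaitDemo`, `SlowAdd`, and `AddInBackgroundAsync` are hypothetical names, and the delay is shortened to 500 ms.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class AwaitDemo
{
    // Hypothetical helper mirroring Add above, with a simulated delay.
    public static int SlowAdd(int a, int b)
    {
        Thread.Sleep(500); // simulate a slow computation
        return a + b;
    }

    public static async Task<int> AddInBackgroundAsync(int a, int b)
    {
        Task<int> task = Task.Run(() => SlowAdd(a, b));

        // Runs immediately, while SlowAdd is still sleeping
        Console.WriteLine("past awaiter");

        // The method returns to its caller here and resumes when the task completes,
        // instead of blocking the calling thread
        int result = await task;

        Console.WriteLine("The result is {0}", result);
        return result;
    }
}
```

From an `async Task Main` (C# 7.1 or later) you could call `int r = await AwaitDemo.AddInBackgroundAsync(1, 2);`. No `Thread.Sleep` is needed to keep the process alive, because the program does not exit until the task returned by `Main` completes.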